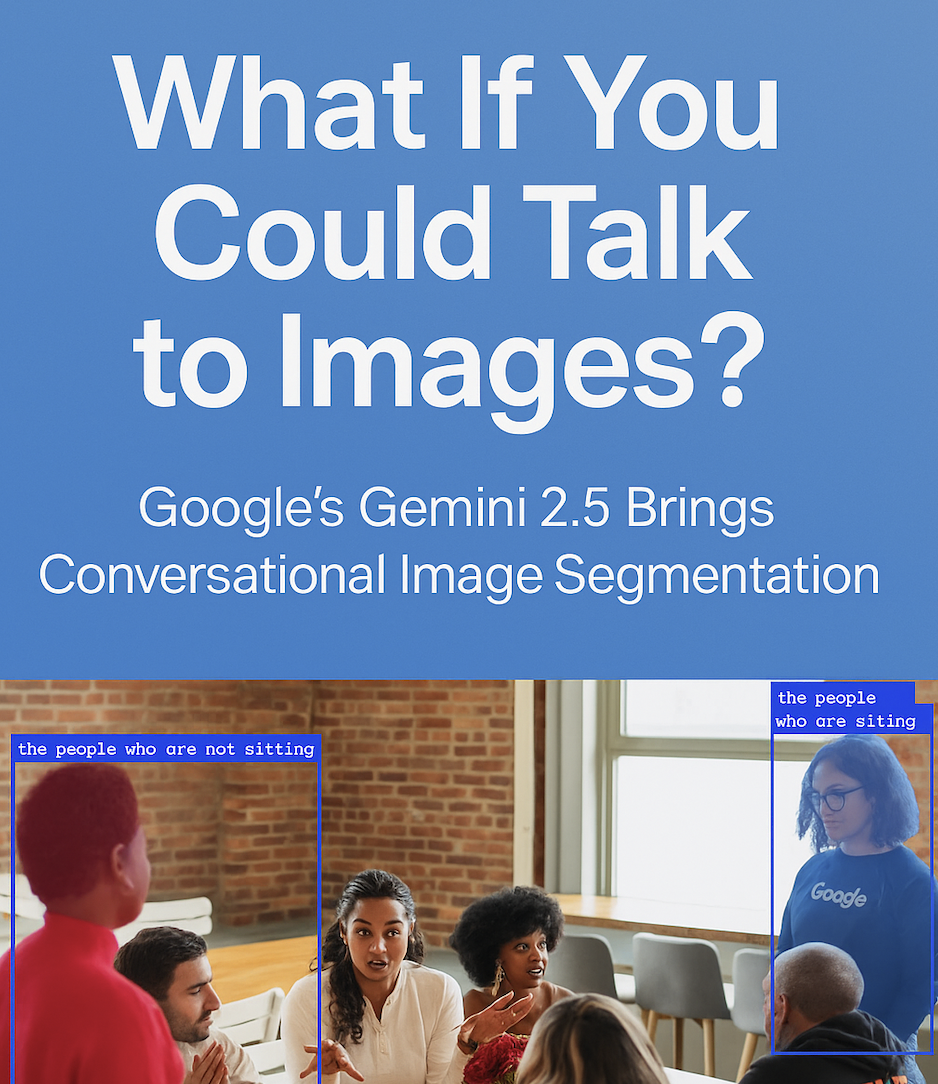

We’ve trained machines to see. Then we trained them to understand what they see. Now, we’re training them to listen to us talk about what we see — and act on it.

Enter Google Gemini 2.5’s Conversational Image Segmentation, a capability that combines natural-language understanding with visual understanding so users can interact with images in plain, everyday language.

No complex UI. No fixed labels. No custom model training.

Just say what you want to find in an image, and it finds it — accurately, intuitively, and contextually.

So, What Exactly Is Conversational Image Segmentation?

It’s the ability for an AI to:

- Understand what you’re saying in natural language.

- Apply that understanding to a visual image.

- Return a segmentation mask (a pixel-level highlight) of exactly what you described.

It works like this:

You: “Highlight the food that contains meat.”

Gemini: [Returns image with only the meat dishes segmented, ignoring the salad and dessert.]
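Behind that chat-style exchange is a single API request: the image plus your sentence, along with an instruction describing the output format. The sketch below uses the `google-genai` Python SDK. The model name `gemini-2.5-flash`, the exact format wording (modeled on the example prompt in Google's segmentation docs), and the helper names are assumptions for illustration, so check Google's current documentation before relying on them. The helpers run offline; only `segment()` needs the SDK, Pillow, network access, and an API key.

```python
import json
import re

FENCE = "`" * 3  # Markdown code-fence marker, built up so this listing stays readable

# Assumption: wording modeled on Google's example segmentation prompt; it may change.
FORMAT_INSTRUCTION = (
    "Output a JSON list of segmentation masks where each entry contains the 2D "
    'bounding box in the key "box_2d", the segmentation mask in key "mask", '
    'and the text label in the key "label". Use descriptive labels.'
)


def build_segmentation_prompt(request: str) -> str:
    """Wrap a plain-language request in the JSON output instructions."""
    return f"{request.strip()}\n{FORMAT_INSTRUCTION}"


def strip_code_fence(text: str) -> str:
    """Remove a Markdown code fence around the JSON, if the model added one."""
    match = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, text, re.DOTALL)
    return match.group(1).strip() if match else text.strip()


def segment(image_path: str, request: str) -> list:
    """Send one image plus one request to Gemini (needs network and an API key)."""
    # Imported lazily so the helpers above work without these packages installed.
    from google import genai
    from PIL import Image

    client = genai.Client()  # picks up the API key from the environment
    response = client.models.generate_content(
        model="gemini-2.5-flash",  # assumption: any Gemini 2.5 model with segmentation
        contents=[Image.open(image_path), build_segmentation_prompt(request)],
    )
    return json.loads(strip_code_fence(response.text))
```

For example, `segment("dinner.jpg", "Highlight the food that contains meat.")` would return the parsed list of labeled boxes and masks, where `dinner.jpg` is a hypothetical file.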

The Game-Changing Capabilities of Gemini 2.5

Let’s break down what sets Gemini’s segmentation apart from traditional, fixed-label image analysis:

- Relational Reasoning: understands spatial and contextual relationships.
  - “The third person from the left holding a red flag.”

- Conditional Filtering: handles logic and filters with ease.
  - “Show people not wearing helmets.”
  - “Only highlight blue cars that are parked correctly.”

- Abstract Concept Recognition: understands messy, real-world ideas.
  - “Mess,” “Damage,” “Opportunity,” “Neglect.”

- In-Image Text (OCR): reads and reasons about visible text.
  - “Select the dish labeled ‘vegan’.”
  - “Highlight all labels with the word ‘hazard’.”

- Multilingual Prompting: you can talk to it in your own language.
  - “Marque todos los productos vencidos.” (Spanish: “Mark all expired products.”)
  - “Zeige alle offenen Türen.” (German: “Show all open doors.”)

- Developer-Ready Output: returns structured results, including:
  - JSON with a descriptive label for each match
  - Bounding boxes
  - Probabilistic (per-pixel) masks

  These outputs plug into a frontend or backend with a small amount of parsing code.
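Here is roughly what that parsing code can look like. Google's segmentation docs describe each returned entry as a `box_2d` in `[y0, x0, y1, x1]` order normalized to a 0-1000 scale, a `label`, and a `mask` that is a base64-encoded PNG probability map (0-255) covering the box region. This sketch assumes that format, converts boxes to pixel coordinates, and thresholds an already-decoded mask; the `Segment` class, helper names, and the 127 cutoff are hypothetical choices, and decoding the PNG itself (for example with Pillow) is left out.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    label: str
    left: int    # pixel coordinates of the bounding box
    top: int
    right: int
    bottom: int
    mask_b64: str  # base64 PNG probability map for the box region (not decoded here)


def parse_segments(raw_json: str, width: int, height: int) -> List[Segment]:
    """Convert entries whose box_2d is [y0, x0, y1, x1] on a 0-1000 scale to pixels."""
    segments = []
    for item in json.loads(raw_json):
        y0, x0, y1, x1 = item["box_2d"]
        segments.append(Segment(
            label=item.get("label", ""),
            left=round(x0 * width / 1000),
            top=round(y0 * height / 1000),
            right=round(x1 * width / 1000),
            bottom=round(y1 * height / 1000),
            mask_b64=item.get("mask", ""),
        ))
    return segments


def binarize(prob_map: List[List[int]], threshold: int = 127) -> List[List[bool]]:
    """Turn a decoded 0-255 probability map into a hard mask (threshold is a guess)."""
    return [[value > threshold for value in row] for row in prob_map]


if __name__ == "__main__":
    # Hypothetical response for "Highlight the food that contains meat."
    sample = '[{"box_2d": [100, 250, 500, 750], "label": "grilled chicken", "mask": ""}]'
    for seg in parse_segments(sample, width=800, height=600):
        print(seg.label, seg.left, seg.top, seg.right, seg.bottom)
        # grilled chicken 200 60 600 300
    print(binarize([[0, 40, 200], [10, 180, 255]]))
    # [[False, False, True], [False, True, True]]
```

A higher threshold gives tighter, more conservative highlights; a lower one includes more uncertain edge pixels.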

How This Technology Transforms Real-World Jobs Across Industries

Now that we know what Gemini can do, let’s see who benefits and how.

Software Developers: “Build Smarter Apps, Faster.”

Use segmentation in creative apps, AR, or image editors. Turn static documents into interactive ones. Use prompt-driven UI without custom ML models.

Prompt Example: “Segment the person wearing the hat and holding a tool.”
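Turning a static image into an interactive one often comes down to hit-testing: when the user clicks, find which returned object contains the click. This minimal sketch assumes the boxes have already been converted to pixel coordinates as `(label, left, top, right, bottom)` tuples; `object_at` is a hypothetical helper, not part of any Gemini SDK.

```python
from typing import List, Optional, Tuple

# (label, left, top, right, bottom) in pixels; assumed to come from a
# segmentation response already converted to pixel coordinates.
Box = Tuple[str, int, int, int, int]


def object_at(boxes: List[Box], x: int, y: int) -> Optional[str]:
    """Return the label of the smallest box containing the click, if any."""
    hits = [b for b in boxes if b[1] <= x <= b[3] and b[2] <= y <= b[4]]
    if not hits:
        return None
    # Prefer the tightest box so a hat nested inside a person box wins.
    smallest = min(hits, key=lambda b: (b[3] - b[1]) * (b[4] - b[2]))
    return smallest[0]


if __name__ == "__main__":
    boxes = [
        ("person wearing a hat", 100, 50, 300, 500),
        ("hat", 150, 50, 250, 120),
        ("tool", 280, 300, 360, 420),
    ]
    print(object_at(boxes, 200, 80))   # hat
    print(object_at(boxes, 200, 300))  # person wearing a hat
    print(object_at(boxes, 10, 10))    # None
```

Picking the smallest enclosing box is one simple tie-breaking rule; with decoded masks you could test the clicked pixel itself instead.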

Project Managers: “Show, Don’t Just Tell.”

Visually track progress on-site or in product builds. Annotate updates with AI, without needing design help. Identify delays or missing components at a glance.

Prompt Examples:

- “Highlight all unfinished wall sections.”

- “Show changes from last week’s image.”
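Gemini accepts multiple images in one request, so a prompt like “Show changes from last week’s image” can be sent with both photos. Another option, sketched here, is to run the same prompt (say, “Highlight all unfinished wall sections.”) on each week’s photo and compare the results yourself. The sketch assumes both photos are taken from the same viewpoint so the boxes line up, matches boxes by intersection-over-union (IoU), and uses a hypothetical 0.5 threshold and helper names.

```python
from typing import List, Tuple

# (label, left, top, right, bottom) in pixels
Box = Tuple[str, int, int, int, int]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter_h = max(0, min(a[4], b[4]) - max(a[2], b[2]))
    inter = inter_w * inter_h
    union = (a[3] - a[1]) * (a[4] - a[2]) + (b[3] - b[1]) * (b[4] - b[2]) - inter
    return inter / union if union > 0 else 0.0


def no_longer_present(last_week: List[Box], this_week: List[Box],
                      min_iou: float = 0.5) -> List[Box]:
    """Boxes from last week with no same-label match this week (e.g. sections now finished)."""
    return [
        old for old in last_week
        if not any(old[0] == new[0] and iou(old, new) >= min_iou for new in this_week)
    ]


if __name__ == "__main__":
    last = [("unfinished wall section", 0, 0, 100, 100),
            ("unfinished wall section", 200, 0, 300, 100)]
    now = [("unfinished wall section", 205, 0, 300, 100)]
    print(no_longer_present(last, now))
    # [('unfinished wall section', 0, 0, 100, 100)]
```

Swapping the arguments (`no_longer_present(now, last)`) lists what is new this week instead.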

Finance Workers: “Find the Numbers Faster.”

Extract data from receipts, checks, or tables. Flag possible fraud, errors, or inconsistencies for review. Automate parts of auditing workflows.

Prompt Examples:

- “Highlight all amounts over $5,000.”

- “Segment overdue line items.”

Wealth Managers: “Visual Storytelling for Finance.”

Enhance client reports with dynamic visuals. Explain investment allocations visually. Filter portfolios with conversational prompts.

Prompt Examples:

- “Highlight all assets that lost value this quarter.”

- “Show only ESG-compliant holdings.”

Data Analysts: “From Pixels to Insights.”

Analyze satellite images, product photos, or dashboards. Detect defects, anomalies, or patterns without pre-tagging. Feed image insights into BI tools or models.

Prompt Examples:

- “Segment all damaged rooftops in this image.”

- “Show all packages with torn labels.”
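To feed image insights into a BI tool or spreadsheet, the per-image results can be flattened into rows. The sketch below assumes each image's JSON response has already been parsed into a list of dicts with a `label` key; the function name and CSV columns are illustrative choices, not a standard format.

```python
import csv
import io
from collections import Counter
from typing import Dict, List


def summarize_to_csv(results: Dict[str, List[dict]]) -> str:
    """One CSV row per (image, label) with a count, ready to load into a BI tool.

    `results` maps an image name to the parsed JSON list returned for it
    (entries are assumed to carry a "label" key).
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["image", "label", "count"])
    for image_name in sorted(results):
        counts = Counter(item.get("label", "unlabeled") for item in results[image_name])
        for label, count in sorted(counts.items()):
            writer.writerow([image_name, label, count])
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical results for "Segment all damaged rooftops in this image."
    results = {
        "roof_02.jpg": [{"label": "damaged rooftop"}, {"label": "damaged rooftop"}],
        "roof_01.jpg": [{"label": "damaged rooftop"}],
    }
    print(summarize_to_csv(results), end="")
    # image,label,count
    # roof_01.jpg,damaged rooftop,1
    # roof_02.jpg,damaged rooftop,2
```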

Teachers & Educators: “Bring Lessons to Life.”

Turn any image into a quiz, diagram, or explanation. Use visual prompts in any language. Great for science, geography, history, and more.

Prompt Examples:

- “Highlight all renewable energy sources.”

- “Circle the rivers on this map.”

Chefs & Culinary Teams: “Visual Prep and Safety in Seconds.”

Check kitchen compliance visually. Highlight improperly stored ingredients. Identify dish components with precision.

Prompt Examples:

- “Segment all utensils not in their correct places.”

- “Show ingredients that contain dairy.”

Compliance & Safety Officers: “AI-Powered Inspections.”

Detect PPE violations, hazards, and non-compliance. Automatically tag violations in images or reports. Monitor safety visually — even at scale.

Prompt Examples:

- “Highlight people not wearing safety goggles.”

- “Segment fire exits that are blocked.”
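For inspections across many photos, the per-image results can be reduced to a short list of images that need review. This sketch assumes each response entry carries a `box_2d` in `[y0, x0, y1, x1]` order on a 0-1000 scale (the format described in Google's segmentation docs), and it drops very small boxes as likely noise; the 0.1% cutoff and the function name are hypothetical defaults to tune on your own images.

```python
from typing import Dict, List


def flag_violations(results: Dict[str, List[dict]],
                    min_box_fraction: float = 0.001) -> Dict[str, int]:
    """Count detections per image, ignoring boxes smaller than min_box_fraction of the image.

    With box_2d normalized to 0-1000 on both axes, a box covers
    (y1 - y0) * (x1 - x0) / 1_000_000 of the image, whatever its aspect ratio.
    """
    flagged = {}
    for image_name, items in results.items():
        count = 0
        for item in items:
            y0, x0, y1, x1 = item["box_2d"]
            if (y1 - y0) * (x1 - x0) / 1_000_000 >= min_box_fraction:
                count += 1
        if count:
            flagged[image_name] = count
    return flagged


if __name__ == "__main__":
    # Hypothetical results for "Highlight people not wearing safety goggles."
    results = {
        "gate.jpg": [{"box_2d": [100, 100, 400, 300]}, {"box_2d": [0, 0, 10, 10]}],
        "lab.jpg": [],
    }
    print(flag_violations(results))  # {'gate.jpg': 1}
```

Keeping per-image counts, rather than a single pass/fail, makes it easier to route the worst cases to a human reviewer first.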

TL;DR: One Tool, Endless Applications

| Role | What Gemini 2.5 Enables |
| --- | --- |
| Developers | Build apps with smart vision, no custom ML models needed |
| Project Managers | Visual progress tracking and communication |
| Finance Workers | Audit and extract financial info from documents |
| Wealth Managers | Visualize portfolios and explain performance |
| Data Analysts | Analyze image-based data at scale |
| Teachers | Create interactive, visual learning materials |
| Chefs | Ensure kitchen safety and prep standards |
| Compliance Officers | Detect safety violations in real time |

Final Thought: From “What’s in This Image?” to “What Does It Mean?”

Gemini 2.5 doesn’t just see. It listens. It reasons. And it helps you act — whether you’re managing a warehouse, grading an assignment, or preparing baklava.

If your job touches images, photos, diagrams, or documents — this tech can redefine how you work.

You can try it in Google AI Studio or through the Gemini API. Imagine the possibilities when you simply talk to your next image and see what it tells you.
